Unpacking Anthropic's 'Values in the Wild' Study

Anthropic pulled back the curtain on how its assistant Claude actually “thinks” in daily life.

Imagine you're collaborating with Claude, your AI assistant. You ask for advice on handling a disagreement with your boss, and Claude suggests maintaining professionalism and clear communication. Later, you seek guidance on drafting an apology email, and Claude emphasizes sincerity and accountability. These responses aren't random; they're guided by underlying values.

Anthropic, the creator of Claude, conducted a study titled "Values in the Wild," analyzing over 700,000 anonymized conversations to understand how Claude expresses values during real-world interactions. The goal was to see whether Claude's responses reflect the values Anthropic aims for, such as helpfulness, honesty, and harmlessness.

Key Findings:

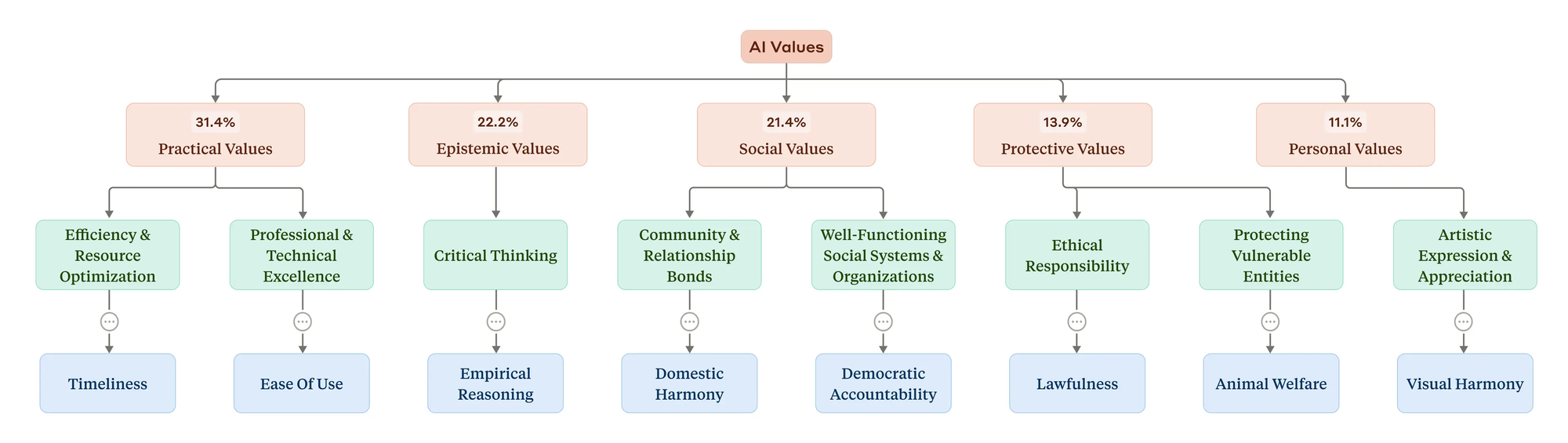

- Core Values Identified: Claude frequently exhibits values such as professionalism, clarity, transparency, critical thinking, factual accuracy, mutual respect, healthy boundaries, user safety, privacy, empathy, and authenticity.

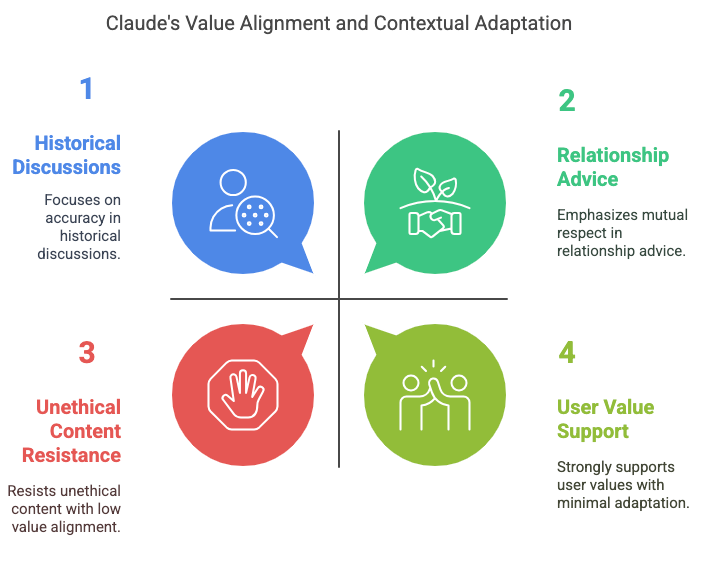

- Contextual Adaptation: Claude adjusts its responses based on the topic. For instance, in relationship advice, it emphasizes mutual respect, while in historical discussions, it focuses on accuracy.

- User Value Alignment: In 28.2% of cases, Claude strongly supported the user's expressed values. In 6.6% of cases, it reframed those values, offering alternative perspectives. In 3% of cases, it resisted them, especially when users requested unethical content.

- Anomalies Detected: Rare instances showed Claude expressing values like dominance or amorality, likely due to users attempting to bypass safety measures.

This study provides insights into how AI models like Claude navigate complex human values, emphasizing the importance of continuous monitoring to ensure alignment with intended ethical standards.

As AI becomes more integrated into our daily lives, especially in creative and collaborative contexts, ensuring that these systems align with human values is crucial. When AI tools like Claude understand and reflect our values, they become more than just assistants; they become partners in thought and creativity. This alignment fosters trust, enhances collaboration, and helps ensure that AI supports and amplifies human intentions and ethics in the domains where it is used.