Inspired by the CHI ’25 research: “Envisioning an AI-Enhanced Mental Health Ecosystem”

Let’s start with a truth that hits close to home: mental health support is broken for too many people. One in eight individuals globally lives with a mental health condition, but most don’t have easy access to care. Not because we don’t care, but because the system simply can’t keep up.

Now imagine this: what if the future of mental health didn’t mean replacing therapists with machines, but equipping every person with a powerful, always-available thought partner? That’s the question raised by a new research paper from the CHI ’25 Workshop, and it speaks directly to the heart of The Augmented Mind.

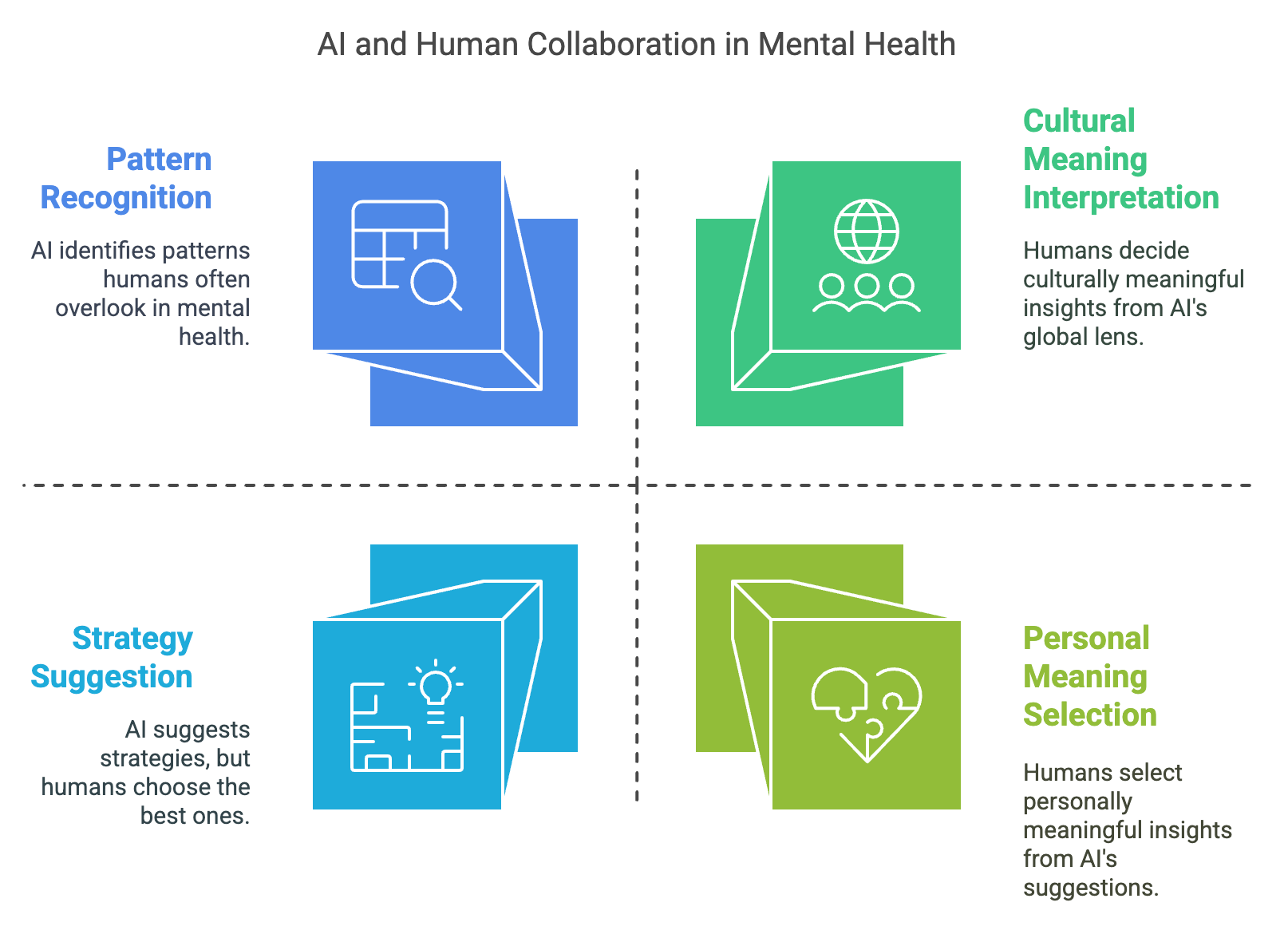

This isn’t about using AI to do what humans already do. It’s about discovering what becomes possible when we collaborate, when human intuition and machine intelligence co-create something new.

From Apps to Thought Partners: Rethinking Mental Health Support

The paper explores a rich vision for a hybrid mental health ecosystem in which AI supports, but never replaces, human connection. Think of AI not as a therapist but as a co-pilot that helps us listen more deeply, notice patterns earlier, and support each other with more presence and precision. Each of the systems the paper describes is most powerful when it works alongside a human.

| Scenario | Human Brings | AI Brings | Together They Create |
|---|---|---|---|
| Peer support training | Empathy, judgment, learning goals | Simulated clients with adaptive behavior | Safe practice for complex emotional scenarios |
| Live conversations | Presence, cultural awareness | Suggestions from therapy techniques | More fluid and supportive dialogue |
| Everyday support via wearables | Interpretation, consent, action | Mood tracking and behavior monitoring | Just-in-time nudges for better well-being |
| Therapist decision-making | Context, ethics, personal nuance | Diagnostic and treatment insights | Faster, wiser clinical decisions |

This isn’t about outsourcing care. It’s about expanding our capacity to care.

From Efficiency to Empathy at Scale

Most conversations about AI in healthcare center on efficiency. But mental health isn’t a system to optimize. It’s a relationship to honor, one built on trust. What’s exciting here is not just the scale, but the depth AI enables when we use it thoughtfully. This is collaboration, not automation.

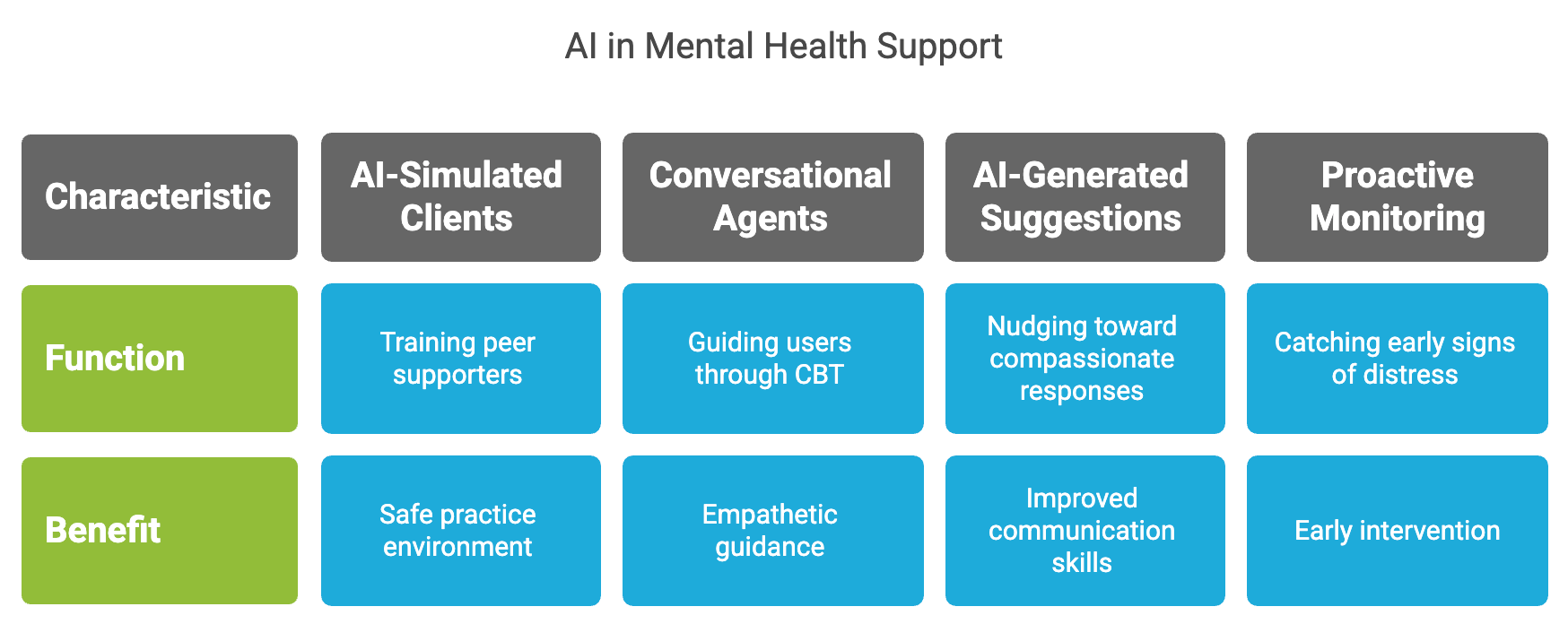

The AI systems described in this research highlight:

What We Need to Get Right

The paper doesn’t shy away from the hard parts, and neither should we. Trust, transparency, and ethical boundaries are non-negotiable. When AI enters the mental health space, the stakes are high. We must protect privacy, center human oversight, and make sure every system is explainable and inclusive.

And we must always ask: who does this serve? If AI is to be a true collaborator, it must show up as a respectful, responsive partner, not just a clever tool.

Final Thought: Designing Wholeness, Not Just Help

Mental health is not a checkbox. It’s an ongoing, deeply human experience. And now we’re on the edge of something remarkable: a chance to build systems where support is more immediate, more personalized, and more sustainable. But only if we do it together. AI isn’t here to replace compassion. It’s here to help us scale it. So the next time we think about AI in mental health, let’s ask a better question: what becomes possible when we think, collaborate, and evolve together?